Artificial Intelligence (AI) has rapidly evolved from rule-based systems to complex machine learning (ML) and deep learning models that can match or outperform humans on some tasks in image recognition, natural language processing (NLP), medical diagnosis, and financial forecasting. However, as these models grow in complexity, they begin to behave like “black boxes”: they produce highly accurate predictions without providing clear explanations of how or why those predictions were made.

This lack of transparency is a serious challenge, especially in high-stakes domains such as healthcare, finance, law, autonomous systems, and software testing. Stakeholders, including developers, business leaders, regulators, and end users, need to understand AI decisions in order to trust, validate, debug, and govern these systems. This is where Explainable AI (XAI) comes in.


This article explores the concept of Explainable AI, its importance, key principles, types of explainability, and the most widely used explainability techniques in modern AI systems.

What is Explainable AI (XAI)?

Explainable AI (XAI) is an area of AI that focuses on designing models and methods that enable humans to understand, interpret, and trust AI system outputs. Its goal is to make the reasoning behind an AI model’s predictions, recommendations, or actions human-understandable.

- Traditional AI answers “What is the prediction?”

- XAI answers “Why this prediction?” and “How was it made?”

Note that the AI models XAI deals with are generally not simple. Instead, explanation mechanisms are either built into the model or applied afterward to clarify its decision-making process.

Why is Explainable AI Important?

- Trust and Adoption: Users are unlikely to trust AI if they don’t understand its outputs, decisions, or actions. When an AI model can explain its outputs, users are more likely to accept it and rely on its recommendations or actions.

- Accountability and Compliance: Regulations such as the GDPR and the EU AI Act, along with other industry and compliance standards, expect transparency in automated decision-making. XAI helps organizations demonstrate compliance.

- Debugging and Model Improvement: With explainable AI models, developers can:

  - Detect bias or unfairness in decision-making.

  - Identify data leakage in the training data.

  - Understand feature importance.

  - Improve model robustness and performance.

  Without proper explanations, diagnosing errors in complex models is challenging.

- Ethical AI and Fairness: AI models that do not explain their actions can unintentionally reinforce biases present in the training data, which can lead to wrong or biased decisions. XAI helps identify discriminatory patterns and promotes the development of fair and responsible AI systems.

- Human-AI Collaboration: AI is meant to assist humans with decision-making, not to replace them. To collaborate effectively with AI models and make better decisions, humans need those models to explain their outputs. XAI makes this possible.

Key Concepts in Explainable AI

XAI is built on the concept of explainability, which is best understood in contrast with interpretability.

Interpretability vs Explainability

- Interpretability refers to how easily a human can understand a model’s internal workings directly. For example, a user can interpret a linear regression model by reading its coefficients, which show how each input affects the output.

- Explainability refers to the techniques used to explain the behavior of any model, including black-box models. With a black-box model, explainability techniques help us understand its behavior and, ultimately, why it produced a given output.

An interpretable model is inherently explainable, but the reverse is not necessarily true.

Types of Explainability

XAI techniques are categorized by scope (global vs. local), model dependency (model-specific vs. model-agnostic), and timing (intrinsic vs. post-hoc). The detailed classification is as follows:

1. Scope: Global vs Local Explainability

Depending on the scope of XAI, it has two types:

- Global XAI explains overall model behavior.

  - It answers the question: Which features generally matter most across all data?

  - Global XAI is useful for governance, auditing, and high-level understanding.

- Local XAI explains individual, instance-level predictions.

  - It answers the question: Why was this specific prediction made?

  - Local explainability is useful for debugging, user feedback, and decision support.
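The difference in scope can be sketched with a deliberately simple case. The example below assumes a hypothetical linear scoring model with made-up weights and data; none of it comes from a real system. For a linear model, one reasonable local explanation is each feature's weight times its deviation from the dataset mean, and a global view can be obtained by averaging the absolute local contributions over all rows.

```python
# Hypothetical linear model: score = BIAS + sum(weight * value). Weights are made up.
WEIGHTS = {"income": 0.5, "debt": -2.0, "age": 0.1}
BIAS = 1.0

# Made-up dataset, for illustration only.
ROWS = [
    {"income": 4.0, "debt": 1.0, "age": 30.0},
    {"income": 6.0, "debt": 0.0, "age": 50.0},
    {"income": 2.0, "debt": 2.0, "age": 40.0},
]

def predict(row):
    return BIAS + sum(WEIGHTS[name] * row[name] for name in WEIGHTS)

def feature_means(rows):
    return {name: sum(r[name] for r in rows) / len(rows) for name in WEIGHTS}

def local_explanation(row, means):
    """Local: how much each feature moves THIS prediction away from the average row's."""
    return {name: WEIGHTS[name] * (row[name] - means[name]) for name in WEIGHTS}

def global_explanation(rows):
    """Global: mean absolute local contribution of each feature across all rows."""
    means = feature_means(rows)
    per_row = [local_explanation(r, means) for r in rows]
    return {name: sum(abs(c[name]) for c in per_row) / len(rows) for name in WEIGHTS}

means = feature_means(ROWS)
local = local_explanation(ROWS[0], means)   # why did row 0 get its score?
global_ = global_explanation(ROWS)          # which features matter most overall?
print("local:", local)
print("global:", global_)
```

In this toy data, the first row's score differs from the average only because of `age`, while `debt` is the most influential feature overall. The two views answer different questions and need not agree.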

2. Model Dependency: Model-Specific vs Model-Agnostic Explainability

Based on model dependency, XAI is classified as:

- Model-specific XAI includes techniques designed for specific model types (e.g., decision trees and linear models).

  - These techniques are often more precise and computationally efficient.

- Model-agnostic XAI techniques, such as LIME and SHAP, can be applied to any model.

  - They analyze input-output pairs without needing access to the model’s internal structure; they treat the model as a black box.

  - They are more flexible but sometimes approximate.

3. Timing/Model Type: Intrinsic vs. Post-hoc

There are two types of explainability, depending on timing or model type:

- Intrinsic explainability comes from inherently understandable models, such as decision trees or linear regression.

  - The structure directly reveals the decision process.

- Post-hoc explainability covers techniques applied to complex “black-box” models (e.g., deep learning).

  - The behavior is explained after training.
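To illustrate the intrinsic case, here is a minimal sketch of a hand-written decision tree. The function name, features, and thresholds are hypothetical. Because the model is just a set of nested rules, the path taken through the tree is itself the explanation, with no separate post-hoc technique needed.

```python
def approve_loan(income, debt_ratio):
    """Hypothetical decision tree; returns the decision and the rules that led to it."""
    path = []
    if income >= 50_000:
        path.append("income >= 50000")
        if debt_ratio <= 0.4:
            path.append("debt_ratio <= 0.4")
            return "approve", path
        path.append("debt_ratio > 0.4")
        return "review", path
    path.append("income < 50000")
    return "reject", path

decision, reasons = approve_loan(income=62_000, debt_ratio=0.55)
print(decision, "because", " and ".join(reasons))
```

A deep neural network offers no comparable path to read off, which is why it needs the post-hoc techniques described in the next section.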

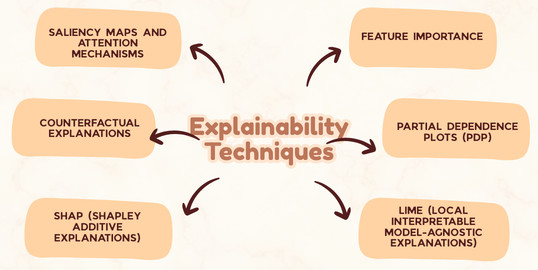

Explainability Techniques for Black-Box Models

1. Feature Importance

Feature importance techniques identify which input features most influence the model’s output and contribute most to its predictions. They are often used to detect bias.

One common variant, permutation importance, shuffles a feature’s values and measures the resulting drop in model performance. Tree-based models such as Random Forests and XGBoost also provide built-in importance scores.

The primary benefit of feature importance techniques is that they are simple and intuitive. They are also useful for global understanding.

However, these techniques can mislead when features are correlated, and they generally do not explain individual predictions well.
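The shuffling idea can be sketched in a few lines of plain Python. This is an illustrative sketch, not a library implementation: `model` is a hypothetical stand-in for any black-box predictor, and the data is synthetic. Each feature column is shuffled in turn, and the average increase in mean squared error is recorded as that feature's importance.

```python
import random

def model(row):
    """Hypothetical black box: depends strongly on x0, weakly on x1, not at all on x2."""
    return 3.0 * row[0] + 0.5 * row[1]

def mean_squared_error(predict, X, y):
    return sum((predict(row) - target) ** 2 for row, target in zip(X, y)) / len(X)

def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Average increase in error after shuffling each feature column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            increases.append(mean_squared_error(predict, X_shuffled, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

# Synthetic data; targets come from the model itself, so the baseline error is 0.
data_rng = random.Random(42)
X = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
y = [model(row) for row in X]

importances = permutation_importance(model, X, y)
print(importances)
```

The function only calls `predict`, so it works with any model. The unused feature `x2` receives an importance of zero because shuffling it cannot change any prediction.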

2. Partial Dependence Plots (PDP)

A PDP shows how the model’s average prediction changes as a single feature is varied while the other features keep their observed values. PDPs are commonly used to understand feature influence trends, and also for model validation and sanity checks.

However, a PDP assumes feature independence, so it is less reliable when features are correlated.

A variation of the PDP, the Individual Conditional Expectation (ICE) plot, shows the effect of a feature on individual data points rather than the average. ICE plots are useful for local analysis.
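The relationship between the two plots can be shown numerically. In this minimal sketch, `model` is a hypothetical black box with an interaction between its two features, and the three data rows are made up. Each ICE curve sweeps one feature over a grid for a single row, and the PDP is the point-wise average of those curves.

```python
def model(row):
    """Hypothetical black box with an interaction between x0 and x1."""
    x0, x1 = row
    return x0 * x1 + 2.0 * x0

def ice_curves(predict, X, feature, grid):
    """One curve per row: predictions as `feature` sweeps `grid`, other features unchanged."""
    curves = []
    for row in X:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature] = value
            curve.append(predict(modified))
        curves.append(curve)
    return curves

def partial_dependence(predict, X, feature, grid):
    """The PDP is the point-wise average of the ICE curves."""
    curves = ice_curves(predict, X, feature, grid)
    return [sum(curve[i] for curve in curves) / len(curves) for i in range(len(grid))]

X = [[0.0, -1.0], [0.0, 0.0], [0.0, 1.0]]  # made-up rows that differ only in x1
grid = [0.0, 1.0, 2.0]

ice = ice_curves(model, X, feature=0, grid=grid)
pdp = partial_dependence(model, X, feature=0, grid=grid)
print("ICE:", ice)
print("PDP:", pdp)
```

Here the three ICE curves have different slopes because the effect of `x0` depends on `x1`, while the PDP reports only the average slope. This is the kind of heterogeneity an ICE plot exposes and a PDP hides.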

3. LIME (Local Interpretable Model-Agnostic Explanations)

LIME fits a simple, interpretable model (such as a linear regression) around a specific prediction to approximate how the black-box model behaves locally, and uses that surrogate to explain the individual prediction.

It works by slightly perturbing the input data near the instance being explained, observing how the model’s output changes, and fitting a local linear model to those perturbed samples.

LIME is model-agnostic and easy to understand. However, its output can vary across runs, and its explanations are valid only locally.
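The perturb-and-fit idea can be sketched without any external library. This is a simplified, LIME-style illustration and not the `lime` package's actual algorithm: `black_box` is a hypothetical model, the Gaussian perturbation and proximity kernel are assumptions of this sketch, and the weighted least-squares fit is solved by hand with Gaussian elimination.

```python
import math
import random

def black_box(x):
    """Hypothetical non-linear model of two features."""
    return math.sin(x[0]) + x[1] ** 2

def solve(A, b):
    """Solve A w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (M[i][n] - sum(M[i][j] * w[j] for j in range(i + 1, n))) / M[i][i]
    return w

def local_surrogate(predict, x, n_samples=500, scale=0.1, seed=0):
    """Fit a proximity-weighted linear model around x.

    Returns [intercept, coef_0, coef_1, ...] for features centred on x.
    """
    rng = random.Random(seed)
    d = len(x)
    A = [[0.0] * (d + 1) for _ in range(d + 1)]
    b = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]           # perturb near x
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / (2 * scale ** 2))            # closer samples count more
        features = [1.0] + [zi - xi for zi, xi in zip(z, x)]
        target = predict(z)
        for i in range(d + 1):                                  # accumulate normal equations
            b[i] += weight * features[i] * target
            for j in range(d + 1):
                A[i][j] += weight * features[i] * features[j]
    return solve(A, b)

intercept, coef_x0, coef_x1 = local_surrogate(black_box, [0.0, 1.0])
print(intercept, coef_x0, coef_x1)
```

Around the point `[0.0, 1.0]` the coefficients come out close to 1 and 2, which matches the local slopes of `sin(x0)` and `x1 ** 2` there. Changing `seed` shifts the values slightly, which reflects the run-to-run variation noted above, and the same coefficients would be misleading far from this point.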

4. SHAP (SHapley Additive exPlanations)

SHAP is one of the most popular XAI methods. It takes a game-theoretic approach, assigning each feature an importance value for a given prediction, and offers both local (single data point) and global explanations.

The key idea behind SHAP is that each feature is treated as a “player” contributing to the prediction. SHAP values represent each feature’s fair share of the difference between the prediction and a baseline prediction.

SHAP is a consistent and theoretically sound technique.

The disadvantage of SHAP is that it is computationally expensive for large models and can be complex to interpret for non-technical users.
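The “players” idea can be made concrete by computing exact Shapley values for a tiny model. This sketch does not use the `shap` library: `model` is hypothetical, and representing a “missing” feature by a fixed baseline value is an assumption of this example. Each feature's value is its marginal contribution averaged over every possible ordering of the features.

```python
from itertools import permutations

def model(x):
    """Hypothetical model with an interaction between features 0 and 1; feature 2 is unused."""
    return 2.0 * x[0] + x[1] + x[0] * x[1]

def shapley_values(predict, x, baseline):
    """Exact Shapley values: average marginal contribution over all feature orderings.

    A feature that has not "joined" yet keeps its baseline value (assumption of this sketch).
    """
    n = len(x)
    contributions = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous_output = predict(current)
        for feature in order:
            current[feature] = x[feature]          # this feature joins the coalition
            output = predict(current)
            contributions[feature] += output - previous_output
            previous_output = output
    return [total / len(orderings) for total in contributions]

x = [1.0, 2.0, 5.0]
baseline = [0.0, 0.0, 0.0]
values = shapley_values(model, x, baseline)
print(values)                                # [3.0, 3.0, 0.0]
print(sum(values), model(x) - model(baseline))
```

The interaction term is split evenly between features 0 and 1, the unused feature gets zero, and the values sum to the gap between the prediction and the baseline prediction. The loop visits all `n!` orderings, which is why exact computation becomes impractical as the number of features grows and practical tools rely on approximations.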

5. Counterfactual Explanations

The counterfactual explanation technique shows the smallest change in the input required to alter the model’s output. It answers questions like: “What is the smallest change needed to get a different outcome?”

The counterfactual explanation technique is highly intuitive and useful for end users and decision support.

Its drawbacks are that realistic, actionable counterfactuals can be hard to find and that the search can be computationally expensive.
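A very simple counterfactual search can be sketched as follows. The `approve` model, its coefficients, and the step sizes are all hypothetical, and restricting the search to one feature at a time (measuring “smallest” in number of steps) is a simplifying assumption of this sketch rather than a standard algorithm.

```python
def approve(applicant):
    """Hypothetical credit model: approve when the score reaches 0."""
    score = 0.04 * applicant["income_k"] - 3.0 * applicant["debt_ratio"] - 1.0
    return score >= 0

def single_feature_counterfactual(predict, instance, steps, max_steps=100):
    """Fewest steps along any ONE feature that flip the prediction.

    `steps` maps each changeable feature to a signed step size.
    Returns (feature, new_value, n_steps), or None if nothing flips the outcome.
    """
    original = predict(instance)
    best = None
    for feature, step in steps.items():
        candidate = dict(instance)
        for n in range(1, max_steps + 1):
            candidate[feature] = instance[feature] + n * step
            if predict(candidate) != original:
                if best is None or n < best[2]:
                    best = (feature, candidate[feature], n)
                break
    return best

applicant = {"income_k": 40.0, "debt_ratio": 0.5}   # currently rejected
steps = {"income_k": 5.0, "debt_ratio": -0.05}      # allowed directions of change
counterfactual = single_feature_counterfactual(approve, applicant, steps)
print(counterfactual)
```

For this applicant, the search reports that raising `income_k` to 65.0 would flip the decision. The `steps` dictionary is also where realism constraints would go: leaving a feature out marks it as something the person cannot change.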

6. Saliency Maps and Attention Mechanisms

These techniques are common in deep learning models, especially for image and text data. Saliency maps highlight the regions of the input that most influence a prediction, and attention mechanisms show which parts of the input the model focuses on.

Saliency maps and attention mechanisms are used in image classification and in NLP models such as transformers.

However, the explanations these techniques provide may be noisy, and they do not always reflect true causality.
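The core of a saliency map is the sensitivity of the output to each input element. Deep learning frameworks obtain this from gradients computed by backpropagation. The sketch below substitutes central finite differences so that it runs with plain Python, and `model` is a hypothetical scoring function over a flattened 2x2 “image”.

```python
def model(pixels):
    """Hypothetical score over a flattened 2x2 image; pixels 1 and 2 are ignored."""
    return 4.0 * pixels[0] - 1.0 * pixels[3]

def saliency(predict, pixels, eps=1e-4):
    """Absolute sensitivity of the output to each input, via central finite differences."""
    scores = []
    for i in range(len(pixels)):
        up = list(pixels)
        up[i] += eps
        down = list(pixels)
        down[i] -= eps
        scores.append(abs(predict(up) - predict(down)) / (2 * eps))
    return scores

image = [0.2, 0.9, 0.5, 0.1]
saliency_map = saliency(model, image)
print(saliency_map)
```

Pixel 1 is the brightest input but has zero saliency, because the score does not depend on it. Saliency measures how sensitive the model is to an input, not how large that input is.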

Explainable AI (XAI) Applications

XAI is critical for enhancing transparency, trust, and accountability in ML, particularly in high-stakes fields. Key applications include medical diagnostics, financial risk assessment and fraud detection, autonomous vehicle decision-making, and regulatory compliance in legal or hiring decisions.

- Healthcare & Medicine: Doctors and medical professionals use XAI to understand the rationale behind AI-driven diagnoses and medical imaging, improving patient care and trust. XAI assists in predicting patient outcomes, such as identifying disease risk factors, enabling more personalized treatment plans.

- Financial Services: XAI is used for credit scoring, loan approvals, and fraud detection. Using XAI, financial institutions can explain to customers why a particular decision was made. It also helps meet regulatory requirements and increases user confidence.

- Autonomous Vehicles: XAI provides transparency into the decision-making process of self-driving cars by explaining, for instance, why the vehicle stopped. This enhances safety and public trust.

- Industrial & Energy Optimization: Manufacturing industries use XAI for predictive maintenance, optimizing industrial processes, and analyzing building energy consumption to improve efficiency.

- Human Resources & Law: In the human resources and law fields, AI models are used to evaluate job applications or legal cases, respectively. These models use XAI to reduce bias, ensure fairness, and make decisions interpretable for human oversight.

- Cybersecurity: XAI models can identify and explain potential security threats, helping security analysts understand and respond to risks more efficiently.

Challenges and Limitations of XAI

- Trade-off Between Accuracy and Interpretability: There is often a trade-off between a model’s accuracy and its interpretability. Highly accurate models such as deep neural networks and large language models are complex and hard to explain, whereas simpler, interpretable models like decision trees often underperform on complex tasks.

- Explanation Quality vs User Understanding: Explanations generated by XAI techniques and algorithms are often technical and, therefore, not meaningful to non-technical users, such as regulators, customers, or business stakeholders.

- Risk of Misleading Explanations: Many XAI methods are post-hoc (applied after training) and may fail to fully or accurately capture the underlying model’s complex reasoning process.

- Scalability and Performance Overhead: Some XAI techniques cannot handle the scale of modern, high-dimensional datasets, and computing explanations adds performance overhead. Organizations may also choose less accurate models to achieve explainability, potentially lowering decision-making quality.

- Lack of Standard Evaluation Metrics: XAI lacks universal, industry-standard metrics for what constitutes a “good” or “sufficient” explanation, making evaluation subjective and difficult.

- Embedded Human Bias: XAI can reveal biases in the training data or algorithms, but it does not inherently fix them. Moreover, these biases are often introduced by humans.

- Data Exposure: Detailed explanations may inadvertently expose sensitive training data or confidential information, creating data privacy and security vulnerabilities.

The Future of Explainable AI

- Built-in explainability in applications by design

- Human-centered explanations that are more understandable

- Regulatory-driven transparency so that explainability can be measured with a standard metric

- Domain-specific explainability

- Integration with AI governance and MLOps

Summary

Explainable AI (XAI) plays a crucial role in making AI systems transparent, trustworthy, and responsible. It bridges the gap between complex algorithms and human understanding by explaining how models make decisions.

From inherently interpretable models to advanced techniques like feature importance, SHAP, LIME, and counterfactual explanations, XAI offers a diverse toolkit for understanding and governing AI systems. With the growing importance of ethical AI, regulatory compliance, and human-AI collaboration, XAI will remain a core pillar of modern artificial intelligence.
