Artificial Intelligence (AI) is influencing nearly every sector, from healthcare and finance to education and entertainment. But as AI systems grow in complexity and sophistication, there is an underlying question: How do we know whether these systems are doing what they should?

The solution is called AI evaluation – often known as AI Eval.

AI evaluation (AI Eval) is a systematic procedure that aims at quantifying, analyzing, and interpreting the capabilities or properties of AI models. It’s not enough to evaluate AI once, but it is an ongoing function in ensuring accuracy, safety, ethicality, and alignment with human expectations.

This article explores AI evaluation in depth, covering its purpose, methods, metrics, challenges, and future directions.

Understanding AI Evaluation

AI evaluation is a structured process of assessing the performance and behavior of an AI system/model based on specific objectives, data, and metrics. It determines how well an AI model meets the requirements of accuracy, generalization, robustness, interpretability, and ethical compliance.

In simple terms, AI evaluation answers an essential question for us: “Is this AI doing what we expect it to do and is it doing it well enough for users to trust it?”. Along with this, it also answers questions such as:

- Is the AI model performing accurately on unseen data?

- Does it treat all users fairly?

- Is it reliable in real-world scenarios?

- Can its decisions be trusted and explained?

Importance of AI Evaluation

AI models behave differently from traditional software. Their behavior changes and performance are affected when exposed to new user behavior or data, and they may not always provide predictable outcomes.

Due to these reasons, AI Eval is critical. An AI model that performs exceptionally well during training may fail under new or adverse conditions, or when new data is used to train it.

Practical evaluation of AI models helps organizations to:

- Ensure Model Validity: Organizations can confirm that AI predictions or actions generated align with real-world outcomes.

- Identify Weaknesses: The shortcomings of AI models, such as biases, overfitting, or underperformance, can be detected.

- Build Trust: Users and regulators can be made aware of transparency and accountability to build trust.

- Enable Improvement: Use model retraining and optimization strategies to enhance performance.

AI evals help with setting up benchmarks for performance, monitoring how well AI features hold up over time, and identifying areas for continuous improvement.

In practice, AI evals often consist of:

- Offline testing that uses curated datasets

- Live testing in a sandbox (controlled) environment

- Human-in-the-loop feedback for qualitative insights (as AI falls short of human imagination)

- Continuous monitoring after launching the product to catch unexpected issues

If these evaluations are not performed, there is a risk that AI features launched may fail to deliver the promised value, confuse users, or erode trust.

An AI Evals Example for Product Managers

Let’s say a development team builds a feature that uses AI to summarize customer support tickets automatically. An AI product manager, or data product manager, would need to evaluate whether the AI is performing its job effectively. A strong AI eval wouldn’t just stop at measuring accuracy against a labeled dataset. Instead, it would ask:

- How often do human agents still need to edit these summaries?

- Does this AI model reduce average ticket resolution time?

- Are customers getting faster or better support outcomes as a result?

- Is the AI performing consistently across different types of tickets (length, topic, complexity)?

There is also a need to check for biases, hallucinations (the fabrication of information), and edge cases where the AI might fail in unexpected ways.

With AI eval, you can assess the AI model for all the above aspects and determine its validity, performance, and accuracy.

The Goals of AI Evaluation

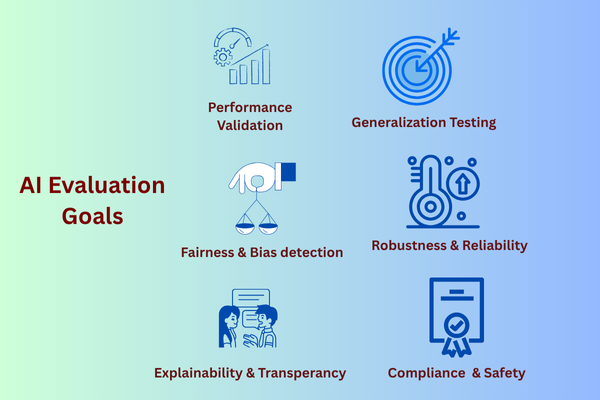

AI evaluation ensures AI models are technically and ethically sound. It serves multiple overlapping goals, including:

Performance Validation

Measuring AI model performance is the most basic function of AI Eval. Performance is measured in terms of accuracy, precision, recall, F1-score, or other quantitative metrics. Validating an AI model for performance ensures the AI system effectively solves the intended problem.

Generalization Testing

An AI model should be able to perform well on unseen data, as well as on the data on which it was initially trained. AI evaluation tests this generalization ability to ensure the model performs well on other data, and does not merely memorize but also learns underlying patterns.

Fairness and Bias Detection

An AI model should behave ethically and treat users with fairness and respect. Evaluation determines that an AI model considers factors such as gender, age, race, or other protected characteristics and exhibits unintended bias based on these factors.

Robustness and Reliability

Evaluation of AI models ensures that models perform well under stressful conditions such as noisy, incomplete, or adversarial inputs. An AI model is expected to be robust enough to provide stability and reliability across different scenarios that often differ from controlled test environments.

Explainability and Transparency

These are the two qualities every AI model should have so that they are comprehensible to humans and various stakeholders understand them.

Compliance and Safety

Evaluating an AI model for compliance with legal and ethical standards is an important goal of AI eval. AI models must be up to date in the context of regulations such as the EU AI Act and the U.S. NIST AI RMF, ensuring that AI systems operate safely and responsibly.

AI Evaluation Types

AI evaluation can be classified based on timing, purpose, and perspective. The following table highlights these types and explains their purpose.

| AI Evaluation Type | Details |

|---|---|

| Pre-Deployment Evaluation |

The following tests are performed for pre-deployment AI evaluation:

As the name suggests, these tests are performed before the model is launched, identifying potential flaws early in the development process.

|

| Post-Deployment Evaluation |

Post-deployment evaluation of the AI model includes:

|

| Quantitative Evaluation |

Quantitative evaluation uses numerical metrics to measure performance. Examples include:

|

| Qualitative Evaluation |

Qualitative evaluation primarily focuses on human judgment and is particularly suited for systems that generate language, images, or creative content. Examples include:

|

| Ethical and Societal Evaluation | Apart from being accurate, modern AI evaluation frameworks assess AI models for their social impact, data ethics, and adherence to responsible AI principles, measuring whether AI contributes positively to society. |

Key Metrics in AI Evaluation

As mentioned earlier, metrics are used in the evaluation of AI. Choosing the right evaluation metric depends on the model type, task, and domain. Here are some commonly used metrics for AI evaluation:

Classification Models

The following metrics are used for classification models:

- Accuracy: Ratio of correctly predicted instances.

- Precision: Correct optimistic predictions out of total positive predictions.

- Recall (Sensitivity): Ability to capture actual positives.

- F1-score: Harmonic mean of precision and recall.

Regression Models

Regression models use the following metrics for AI evaluation:

- Mean Squared Error (MSE): Average squared difference between predictions and actuals.

- Root Mean Squared Error (RMSE): Square root of MSE, interpretable in original units.

- R² Score: Proportion of variance explained by the model.

Generative Models

To evaluate generative AI models, the following metrics are useful:

- BLEU / ROUGE / METEOR: These metrics evaluate natural language generation.

- FID (Fréchet Inception Distance): Measures similarity between generated and authentic images.

- Perplexity: Evaluates fluency and predictability in language models.

Fairness and Bias

Fairness and bias in an AI model are assessed using the following metrics:

- Demographic parity: Equal favorable prediction rates across groups.

- Equal opportunity: Equal, accurate, favorable rates.

- Calibration: Predictions are equally accurate for all demographic groups.

Robustness

For assessing the robustness of an AI model, the following measures are used:

- Adversarial accuracy: Determining the model’s performance under adverse, unusual situations.

- Stability metrics: Assess changes in output resulting from minor variations in input.

Explainability

Explainability of an AI model is measured with:

- SHAP/LIME scores: These scores quantify the influence of each feature.

- Interpretability indices: These indices help to evaluate the clarity of explanations to human users.

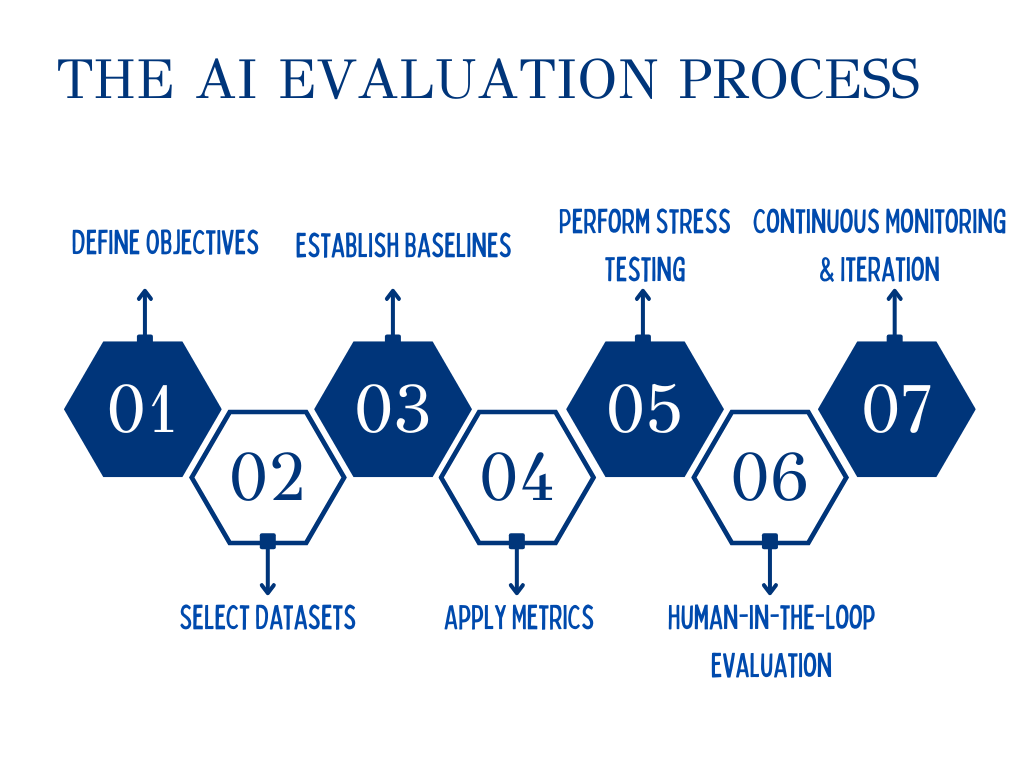

The AI Evaluation Process

A complete AI evaluation process generally looks as follows:

1. Define Objectives

Begin the evaluation process by identifying what you want to measure: accuracy, fairness, or interpretability. Different use cases (e.g., credit scoring vs. image classification) require different criteria. Hence, it is essential to define the objectives of evaluation before you start the assessment.

2. Select Datasets

Utilize sample datasets to assess AI models that accurately reflect the diversity and complexity of real-world data. Divide this data into training, validation, and testing sets to prevent overfitting.

3. Establish Baselines

Set benchmarks or base conditions for comparing model performance. Compare AI performance against these set benchmarks or non-AI baselines (human performance, rule-based systems, etc.). It is not feasible to measure AI model performance or other quantitative metrics without setting a benchmark.

4. Apply Metrics

Depending on the evaluation type, select and calculate relevant metrics suited to the model’s goals and domain, taking into account both quantitative and qualitative indicators.

5. Perform Stress Testing

Test the AI model on extreme or edge cases, such as noisy inputs or rare events, to test its robustness in unusual scenarios.

6. Human-in-the-Loop Evaluation

Human insights remain invaluable, particularly when addressing the nuanced and subjective aspects of AI. Combine automated scoring with human assessments to evaluate subjective qualities, such as empathy, creativity, or trustworthiness.

7. Continuous Monitoring and Iteration

AI models must be continuously tracked for any performance degradation, bias drift, and compliance with evolving standards.

Tools and Frameworks for AI Evaluation

The accelerated adoption of AI has opened a new path for the development of tools and frameworks in modern evaluation.

| Tool/Framework | Description |

|---|---|

| testRigor | This is a no-code AI testing platform that automates the end-to-end testing and evaluation of AI-driven applications, ensuring functionality, consistency, and performance without requiring deep technical expertise. |

| MLflow | An open-source platform that tracks experiments, logs metrics, and manages model versions. |

| TensorBoard | A visualization tool for TensorFlow models to monitor loss curves, metrics, and embeddings. |

| Hugging Face Evaluate | A library that offers standardized metrics for NLP model evaluation, such as BLEU, ROUGE, and accuracy. |

| Google Model Card Toolkit | A toolkit for developers to document and communicate evaluation results transparently. |

| Fairlearn and AIF360 | Frameworks to assess and mitigate bias and fairness issues in AI models. |

Challenges in AI Evaluation

- Lack of Standardization: There is no standard process for evaluating AI, as different organizations and domains use varying metrics and benchmarks according to their specific requirements, making comparisons difficult.

- Dynamic Real-World Data: Data distributions in the real world are never uniform and change over time (data drift), causing model performance to degrade.

- Subjectivity in Evaluation: In tasks such as conversational AI or creative generation, human judgment introduces subjectivity and potential bias.

- Measuring Explainability: It is challenging to quantify interpretability, as human understanding is not easily measurable.

- Ethical and Societal Dimensions: Defining fairness, accountability, and social impact involves not only mathematical considerations but also moral and cultural considerations.

Best Practices for Effective AI Evaluation

- Define success criteria before building or training AI models.

- Utilize diverse and representative datasets to encourage fairness and reduce bias.

- Evaluate the model continuously throughout the process, not just at deployment.

- Include humans in the loop for subjective or ethical insights.

- Document the process thoroughly so that stakeholders can be aware of it.

- Adopt open-source and reproducible frameworks for setting base conditions.

- Align evaluation metrics with business goals, not just technical metrics.

- Monitor real-world customer feedback and update AI models accordingly.

The Future of AI Evaluation

Here are the emerging trends for AI evaluation:

- Autonomous evaluation systems will utilize AI to evaluate AI, thereby making evaluation faster and more scalable.

- Explainable AI (XAI) that makes model decisions more transparent to humans, improving trust and adoption, will be popular.

- Ethical auditing and certification will become mandatory.

- Human-centric metrics will dominate, measuring emotions such as empathy, inclusivity, and impact.

Conclusion

Assessment of AI is not just an exercise in technical prowess. With AI shaping the world, it is crucial that we can assess how appropriate an AI system is by not only seeing how intelligent it is but also its transparency, trustworthiness, and adherence to regulations.