“A great tester is not the one who finds the most bugs, but the one who ensures the right bugs get fixed.” – Cem Kaner.

Testing isn’t simply finding bugs; it is knowing how things work, thinking like a user, and making sure that everything runs smoothly. It’s about digging deep, challenging assumptions, and finding issues before they become actual problems. A great tester does more than execute steps: they’re curious, observant, and one step ahead, whether verifying edge cases, ensuring a smooth user experience, or simply checking that code behaves as expected. A whole industry is focused on keeping software reliable, intuitive, and, most importantly, trustworthy.

Whether you’re just getting started with testing or you’re a seasoned QA guru, this cheat sheet will help guide you through all aspects of manual testing. So let’s get started.

What is Manual Testing?

Manual testing is a process where test cases are executed manually without using any automation tools. It requires domain knowledge, analytical capabilities and test execution techniques. In manual testing, a human tester plays the role of an end user and interacts with the software to check if it functions as expected.

Key Aspects of Manual Testing

- No Automation Involved: Testers execute test cases without using automation tools.

- Human Judgment: Testers use intuition, domain knowledge, and real-world scenarios.

- Defect Identification: The primary goal is to find bugs, inconsistencies, and missing features.

- User Perspective Testing: Makes sure that the software meets customer expectations.

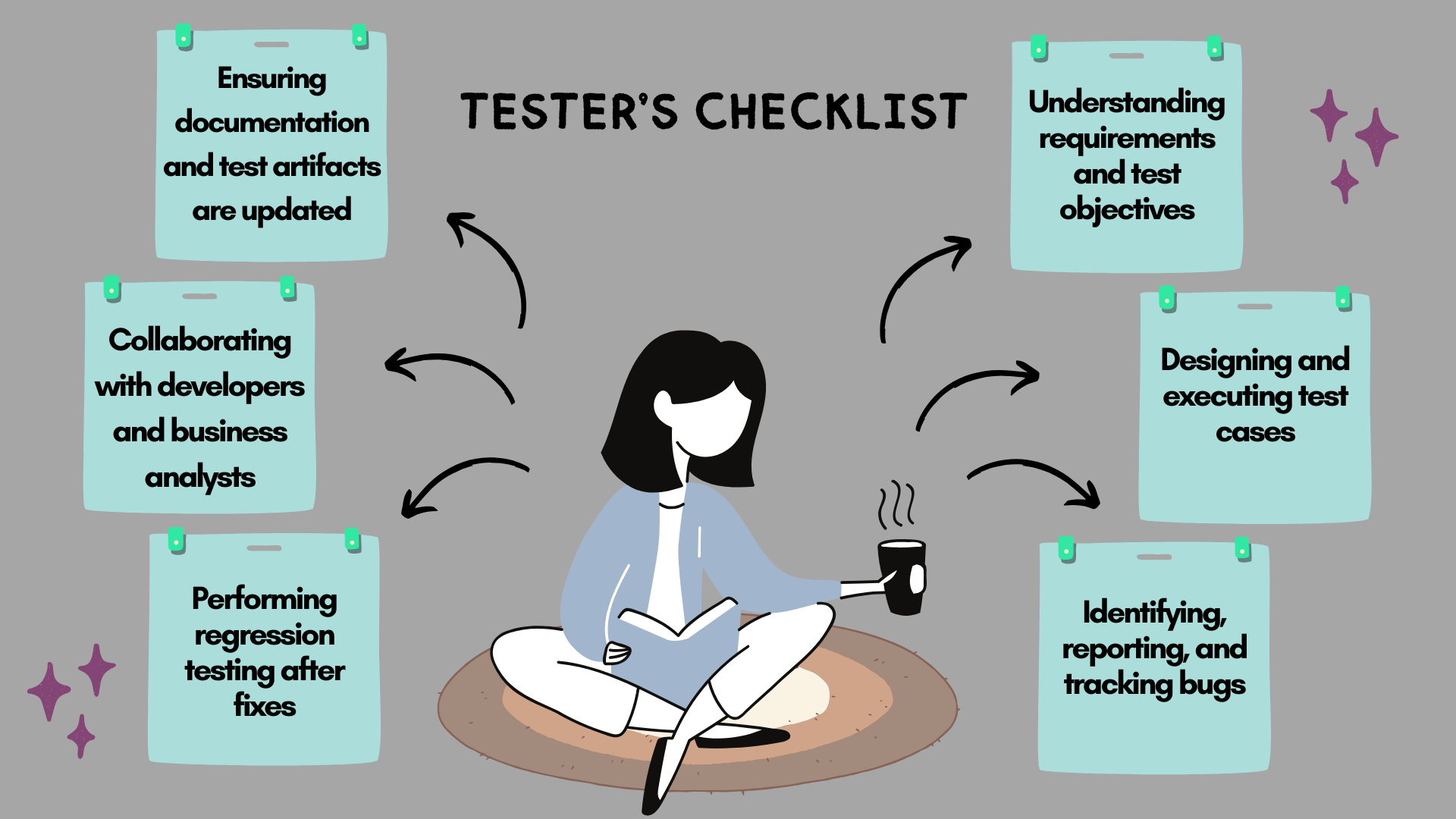

What Does a Tester Do?

A manual tester is responsible for maintaining the software quality through various activities.

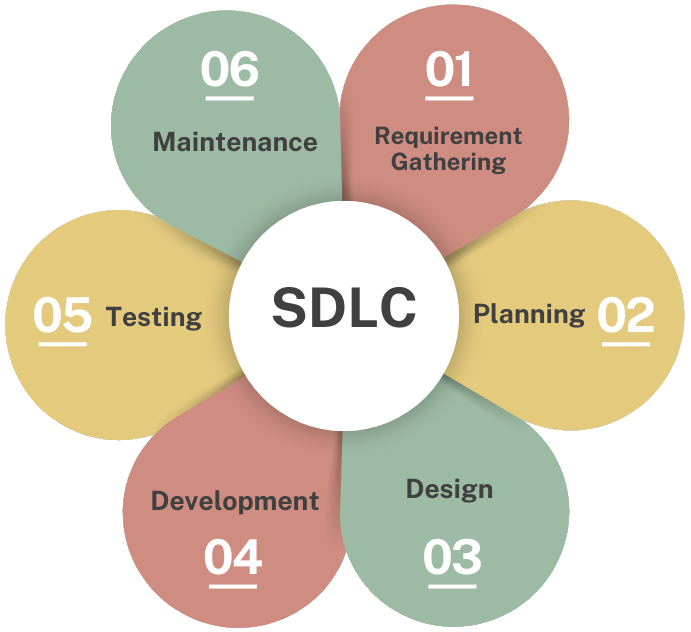

Software Development Life Cycle

The Software Development Life Cycle (SDLC) defines the process of planning, developing, testing, and deploying software.

Phases of SDLC

- Requirement Gathering: Understanding business and user needs.

- Planning: Defining scope, resources, and risk management.

- Design: Creating system architecture and UI/UX design.

- Development: Writing code for the application.

- Testing: Validating the application manually and through automation.

- Maintenance: Updating and improving the software post-release.

Testing is crucial in SDLC to maintain quality, reliability, and performance.

Comparing SDLC Methodologies

There are various Software Development Life Cycle (SDLC) models, each of which structures the development process differently.

| SDLC Model | Description |
|---|---|
| Waterfall Model | A linear, sequential approach in which each phase must be finished before the next one begins. |
| Iterative Model | Software is developed and improved through repeated cycles. |
| Spiral Model | Combines iterative development with risk analysis; each loop passes through planning, risk evaluation, design, and assessment. |
| Agile Model | A flexible, iterative approach in which development is broken into short cycles (sprints). |
| RAD (Rapid Application Development) | A faster approach than Waterfall that focuses on prototyping and user feedback. |
| DevOps | Integrates development and operations teams for continuous development, testing, and deployment. |

Testing Methods

There are three main methods used in software testing:

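| Method | Description |
|---|---|
| Black Box Testing | Tests the software’s functionality against its requirements without knowledge of the internal code or structure. |
| White Box Testing | Tests the internal logic, code paths, and structure of the software with full knowledge of the implementation. |
| Gray Box Testing | Combines both approaches: the tester has partial knowledge of the internals and uses it to design tests that are run through the external interface. |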

Essential Manual Testing Concepts

| Concept | Description |
|---|---|
| Test Case | A structured set of inputs, execution conditions, and expected results designed to verify a specific feature or functionality of the application. |
| Test Scenario | A high-level description of a test objective that covers multiple test cases to validate a particular workflow or user interaction. |
| Defect/Bug | An error, flaw, or deviation in the software that causes it to behave unexpectedly or incorrectly compared to the specified requirements. |
| Test Plan | A comprehensive document outlining the testing strategy, scope, objectives, resources, schedule, and responsibilities for a testing cycle. |
| Test Environment | The configured hardware, software, network, and other tools required to execute test cases in a controlled setting. |
| Severity vs. Priority | Severity represents the impact of a defect on system functionality. Priority determines how urgently it should be fixed. |
| DRE (Defect Removal Efficiency) | Measures the proportion of defects found and removed before release: DRE = (Total Defects Found – Total Defects Found in Production) / Total Defects Found × 100 |
| Defect Tags | Descriptive labels used to categorize and organize defects for easier analysis, retrieval, and filtering. |
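To see how the DRE formula behaves, here is a minimal Python sketch. The function name `defect_removal_efficiency` and the example counts are hypothetical, chosen only for illustration; "total found" is assumed to include the defects later reported in production.

```python
def defect_removal_efficiency(total_found: int, found_in_production: int) -> float:
    """Return DRE as a percentage.

    total_found counts every defect found, before and after release;
    found_in_production counts only the defects that escaped to production.
    """
    if total_found <= 0:
        raise ValueError("total_found must be positive")
    if not 0 <= found_in_production <= total_found:
        raise ValueError("found_in_production must be between 0 and total_found")
    # Defects caught before release, as a share of all defects found.
    return (total_found - found_in_production) * 100 / total_found


# Hypothetical example: 95 defects caught in testing, 5 more reported in production.
print(defect_removal_efficiency(total_found=100, found_in_production=5))  # 95.0
```

A DRE close to 100 means almost every defect was caught before users saw it.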

Difference between Quality Assurance (QA) and Quality Control (QC)

The following table summarizes the key differences between QA and QC.

| Parameter | Quality Assurance (QA) | Quality Control (QC) |
|---|---|---|
| Objective | QA is the process of providing assurance that the requested quality will be achieved. | QC is the process of fulfilling the requested quality. |
| Technique | QA is the technique of managing quality. | QC is the technique of verifying quality. |
| Involvement in development phase | QA is involved during the development phase. | QC is not involved during the development phase. |
| Program execution | QA does not involve executing the program. | QC always involves executing the program. |
| Type of tool | QA is a managerial tool. | QC is a corrective tool. |
| Orientation | QA is process-oriented. | QC is product-oriented. |
| Aim | QA aims to prevent defects. | QC aims to identify and correct defects. |
| Order of execution | QA is performed before QC. | QC is performed after QA activities are done. |
| Technique type | QA is a preventive technique. | QC is a corrective technique. |
| Scope (SDLC/STLC) | QA is responsible for the entire software development life cycle. | QC is responsible for the software testing life cycle. |
| Team | The entire project team is involved. | The project testing team is involved. |
| Time consumption | QA is less time-consuming. | QC is more time-consuming. |

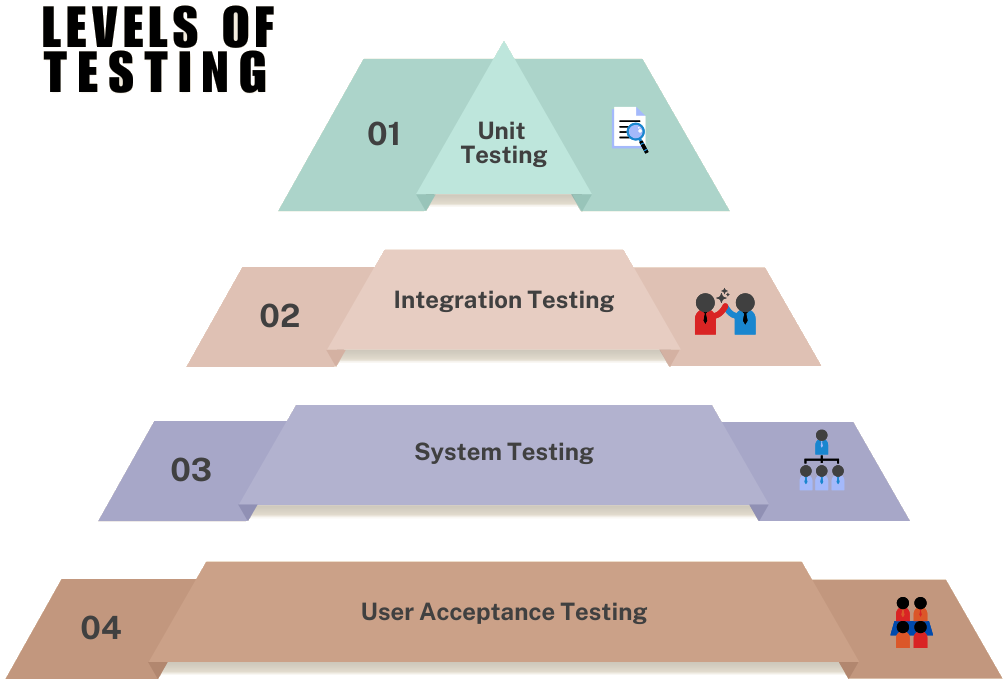

Levels of Testing

Software testing is conducted at different levels to ensure quality.

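| Level | Description |
|---|---|
| Unit Testing | Tests individual units or components (such as functions, methods, or classes) in isolation, usually by developers. |
| Integration Testing | Tests the interactions and data flow between integrated units or modules to expose interface defects. |
| System Testing | Tests the complete, integrated system against the specified functional and non-functional requirements. |
| User Acceptance Testing (UAT) | Performed by end users or clients to confirm that the software meets business needs and is ready for release. |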

Types of Testing

Manual testing consists of various testing types, each designed to validate different aspects of software functionality, performance, and security.

| Testing Type | Description |
|---|---|
| Functional Testing | Verifies that the application behaves as expected by validating its features against specified requirements. |
| Non-Functional Testing | Evaluates aspects like performance, security, and usability to make sure the software meets quality standards beyond functionality. |
| Smoke Testing | A quick, high-level test to verify that the critical functionalities of the application work before proceeding with more detailed testing. |
| Sanity Testing | A focused check on specific functionalities or recent fixes to confirm they work correctly without conducting a full regression test. |
| Regression Testing | Ensures that new changes, bug fixes, or enhancements do not negatively impact previously working functionality. |
| Exploratory Testing | Performed without predefined test cases, relying on tester intuition and experience to uncover defects. |
| Ad-hoc Testing | An unstructured testing approach in which testers explore the application at random to find unexpected issues. |
| Performance Testing | Assesses the application’s speed, responsiveness, and stability under different conditions to identify performance bottlenecks. |
| Security Testing | Examines the system for vulnerabilities, unauthorized access, and data breaches to confirm that its security measures are robust. |
| Accessibility Testing | Verifies that the application is usable by people with disabilities, following standards like WCAG for inclusivity. |

Test Case Design Techniques

| Technique | Description |
|---|---|
| Equivalence Partitioning | Divides input data into valid and invalid partitions to minimize the number of test cases while maximizing test coverage. |
| Boundary Value Analysis | Focuses on testing input values at the extreme edges (minimum, maximum, and just outside the limits) to detect boundary-related defects. |
| Decision Table Testing | Uses a tabular format to represent different input conditions and their corresponding expected outputs, making sure that all possible scenarios are tested. |
| State Transition Testing | Evaluates the system’s behavior by testing valid and invalid state changes to verify that transitions between states function correctly. |
| Use Case Testing | Validates software functionality based on real-world user interactions and workflows to make sure that the application meets business requirements. |
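To make equivalence partitioning and boundary value analysis concrete, suppose (hypothetically) that an age field accepts whole numbers from 18 to 60 inclusive. The Python sketch below derives one representative value per partition plus the classic boundary values, then checks each against expectations. `is_valid_age` is an invented stand-in for the system under test, not part of any real application.

```python
from typing import Callable

MIN_AGE, MAX_AGE = 18, 60  # hypothetical requirement: 18..60 inclusive


def is_valid_age(age: int) -> bool:
    """Stand-in for the system under test (hypothetical)."""
    return MIN_AGE <= age <= MAX_AGE


def equivalence_partitions(lo: int, hi: int) -> dict[str, int]:
    """One representative value from each partition."""
    return {
        "invalid_below": lo - 10,
        "valid": (lo + hi) // 2,
        "invalid_above": hi + 10,
    }


def boundary_values(lo: int, hi: int) -> list[int]:
    """Values just below, at, and just above each boundary."""
    return [lo - 1, lo, lo + 1, hi - 1, hi, hi + 1]


def run(check: Callable[[int], bool], lo: int, hi: int) -> list[tuple[int, bool, bool]]:
    """Return (input, expected, actual) for every derived test input."""
    inputs = list(equivalence_partitions(lo, hi).values()) + boundary_values(lo, hi)
    # The expected result comes from the requirement, the actual from the system.
    return [(v, lo <= v <= hi, check(v)) for v in inputs]


for value, expected, actual in run(is_valid_age, MIN_AGE, MAX_AGE):
    status = "PASS" if expected == actual else "FAIL"
    print(f"age={value:>3} expected={expected!s:<5} actual={actual!s:<5} {status}")
```

Nine inputs cover the whole range: three partitions and six boundary points. Testing every value from 0 to 100 would add little extra confidence.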

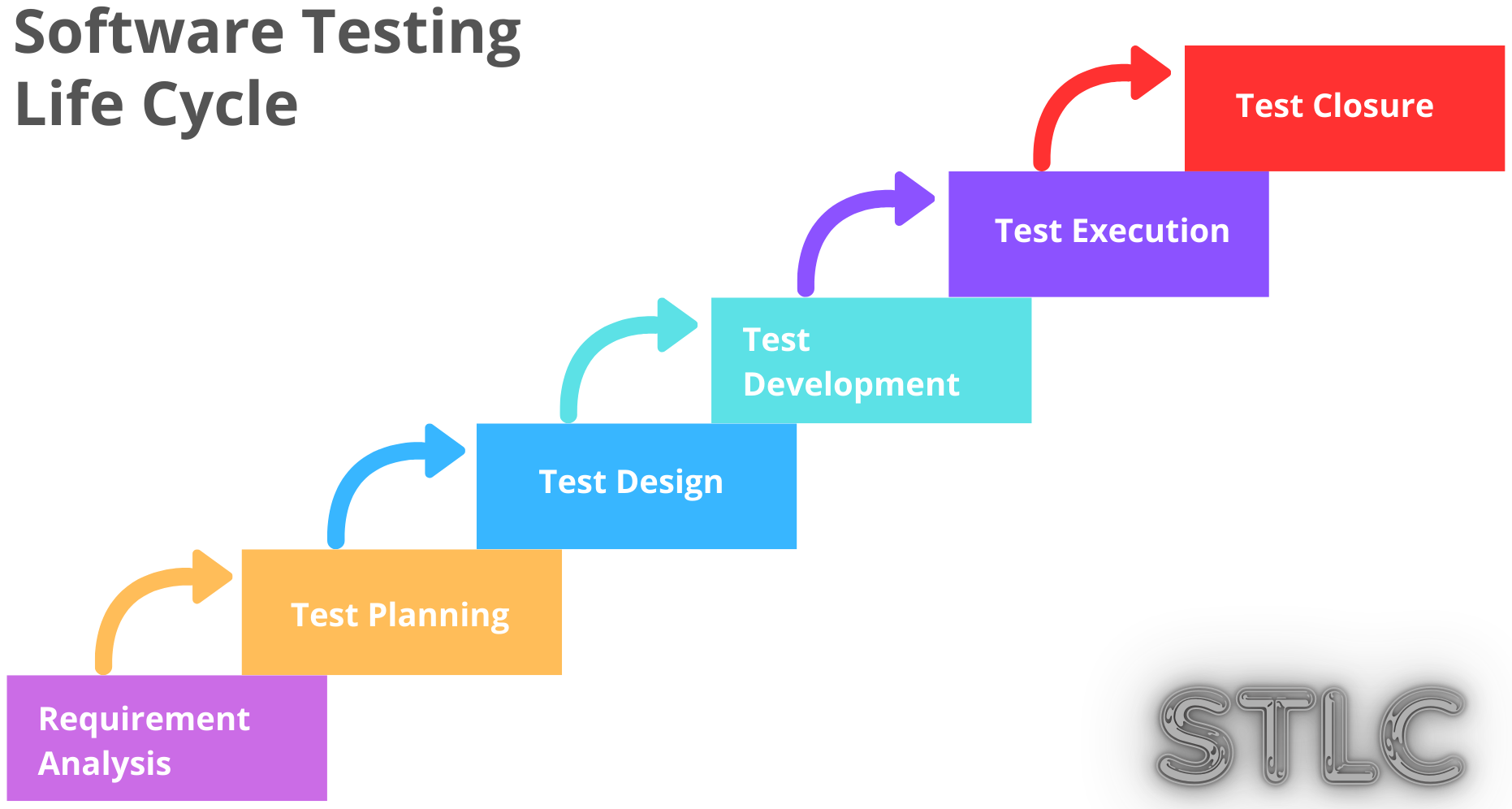

Software Testing Life Cycle

| Phase | Description |
|---|---|
| Requirement Analysis | Involves understanding the project requirements and defining the test scope to ensure all functionalities are covered. |
| Test Planning | Focuses on creating the test strategy, defining test objectives, estimating resources, and scheduling test activities. |
| Test Case Development | Involves writing detailed test cases, preparing test data, and defining expected outcomes based on requirements. |
| Test Environment Setup | Prepares the necessary hardware, software, network configurations, and access permissions to execute tests. |
| Test Execution | Running test cases, comparing actual vs. expected results, and logging defects for resolution. |
| Test Closure | Finalizing documentation, analyzing test metrics, preparing closure reports, and discussing lessons learned for future improvements. |

Error vs. Bug vs. Defect vs. Fault vs. Failure

Software testing is mainly performed to identify errors, deficiencies, or missing requirements in the application. It matters because errors and bugs found before release can be fixed before they reach users.

In software testing and development, the terms “error”, “defect”, “bug”, “fault”, and “failure” are related but have distinct meanings. These terms are explained below:

Error

An error is a mistake made by a developer, programmer or any other human involved in the development of an application. Typos, logical errors, or incorrect assumptions are all errors.

- Errors occur because of wrong logic, problematic syntax or loops, or typing mistakes.

- An error is identified by comparing actual results with expected results.

Bug

A bug is an informal term for a defect, used to describe a situation where the software product or application does not work as per the requirements. When a bug is present, the application may misbehave or break.

- Once identified, a bug can be documented with a standard bug-report template so that others can reproduce it.

- Bugs that may cause user dissatisfaction are considered major bugs and have to be fixed as a priority.

- Application crashes are among the most common and visible types of bug.

Defect

A defect is a flaw or a problem in the software that causes it to deviate from its expected behavior.

- A defect can affect the whole program.

- A defect represents the inability of the application to meet its specified criteria, preventing it from performing its intended work.

- Defects are introduced when a developer makes mistakes during development.

Fault

Fault is the term used to describe an error that occurs due to factors such as a lack of resources or specific steps not being followed properly.

- A fault is an undesirable situation that occurs mainly due to invalid documented steps or a lack of data definitions.

- It is unintended behavior in an application program and may cause the program to raise warnings.

- If left unchecked, a fault, however minor, may lead to failure in the application.

Failure

Failure is the inability of a system or application to perform its intended function within specified criteria and limits. When defects go undetected, failure occurs, resulting in the application not working properly.

- Failure can also result from an accumulation of several defects over time.

- The system or application may become unresponsive as a result of failure.

- Failures are usually detected by end users.
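The chain from error to failure is easier to see in a small piece of code. In this hypothetical Python sketch, the developer’s error is forgetting that `range()` excludes its stop value. The resulting defect (fault) is the off-by-one call left in the code, and the failure is the wrong total observed when the function runs. The function names and the requirement are invented for illustration.

```python
def sum_first_n(n: int) -> int:
    """Requirement (hypothetical): return 1 + 2 + ... + n."""
    # Defect/fault: the developer's error (forgetting that range() excludes
    # its stop value) left an off-by-one in the code, so n is never added.
    return sum(range(1, n))


def sum_first_n_fixed(n: int) -> int:
    """Corrected version: stopping at n + 1 makes the range include n."""
    return sum(range(1, n + 1))


expected = 15  # 1 + 2 + 3 + 4 + 5
actual = sum_first_n(5)
# Failure: the defect only becomes visible when the code runs and the
# actual result differs from the expected result.
print(f"expected={expected} actual={actual} failure={actual != expected}")
print(f"after fix: {sum_first_n_fixed(5)}")
```

Running it prints `expected=15 actual=10 failure=True` and then `after fix: 15`. The defect sat in the code all along, but it turned into a failure only once the function was executed with a real input.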

Bug Life Cycle

In software testing, the bug life cycle is the process a bug follows from its initial discovery to its resolution and closure. Bug life cycle is a systematic way of managing defects or bugs, ensuring they are detected, reported, documented, tracked, and fixed.

Here are the steps that are followed in a typical bug life cycle.

- Detect Defect: The bug is discovered during testing and reported by the tester, usually including details like the steps to reproduce it and its severity.

- Report Defect: The QA team evaluates the severity and impact of the bug to determine its priority. The bug is assigned to a developer who will be responsible for fixing it.

- Resolve Defect: The developer addresses the bug and creates a fix. The tester verifies the fix and confirms that the bug is resolved.

- Re-test Defect: If the bug is found to still exist after the initial fix, it is re-assigned to the developer for further investigation and resolution.

- Close Defect: Once verified as fixed, the bug is closed.

Bug Status Cycle

A bug status cycle consists of different statuses a bug assumes from its initial reporting to its resolution and closure. Bug status cycle helps in tracking the progress of fixing a bug and ensuring effective management of defects.

The following are different stages in a bug status cycle:

- New: The bug is reported for the first time and documented, typically in a tracking system such as Jira.

- Assigned: The bug is assigned to a developer or development team for analysis and resolution.

- Open: The developer is working on resolving the bug.

- Fixed: The bug fix has been implemented, and the bug is marked resolved.

- Pending retest: The bug is marked as fixed but is awaiting retesting by the tester.

- Retest: The fix is verified by the tester to see if the issue is resolved and the fix is working as expected.

- Verified: If the fix is retested successfully, the bug is marked as verified.

- Reopened: If it is found during retesting that the fix is not working as expected, the bug is reopened, and the developer team is notified.

- Closed: Once the fix is verified and the issue no longer reproduces, the bug is marked as closed.

- Duplicate: If the same bug is reported multiple times, it is marked as a duplicate to avoid duplicate work.

- Deferred: The bug is acknowledged, but its resolution is postponed due to low priority or other reasons.

- Rejected: If the reported issue is not a genuine bug or is considered an enhancement request, the bug is marked rejected.

- Not a defect: If the reported behavior is an intended feature or a minor issue that does not impact core functionality, the bug is marked Not a Defect.
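Bug trackers usually enforce the status cycle as a state machine, where each status allows only certain next statuses. The Python sketch below models the statuses listed above as a transition table. The exact transitions are an assumption based on the descriptions in this section. Real tools such as Jira let each team configure its own workflow, so treat this as an illustration rather than a standard.

```python
from enum import Enum


class Status(Enum):
    NEW = "New"
    ASSIGNED = "Assigned"
    OPEN = "Open"
    FIXED = "Fixed"
    PENDING_RETEST = "Pending retest"
    RETEST = "Retest"
    VERIFIED = "Verified"
    REOPENED = "Reopened"
    CLOSED = "Closed"
    DUPLICATE = "Duplicate"
    DEFERRED = "Deferred"
    REJECTED = "Rejected"
    NOT_A_DEFECT = "Not a defect"


# Assumed workflow: which statuses may follow each status.
TRANSITIONS: dict[Status, set[Status]] = {
    Status.NEW: {Status.ASSIGNED, Status.DUPLICATE, Status.REJECTED,
                 Status.DEFERRED, Status.NOT_A_DEFECT},
    Status.ASSIGNED: {Status.OPEN, Status.DUPLICATE, Status.REJECTED,
                      Status.DEFERRED, Status.NOT_A_DEFECT},
    Status.OPEN: {Status.FIXED, Status.DEFERRED, Status.REJECTED},
    Status.FIXED: {Status.PENDING_RETEST},
    Status.PENDING_RETEST: {Status.RETEST},
    Status.RETEST: {Status.VERIFIED, Status.REOPENED},
    Status.VERIFIED: {Status.CLOSED},
    Status.REOPENED: {Status.ASSIGNED, Status.OPEN},
    Status.DEFERRED: {Status.ASSIGNED},
    # Terminal statuses: no further transitions in this sketch.
    Status.CLOSED: set(),
    Status.DUPLICATE: set(),
    Status.REJECTED: set(),
    Status.NOT_A_DEFECT: set(),
}


def move(current: Status, target: Status) -> Status:
    """Return the new status, or raise if the workflow forbids the move."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"Cannot move from {current.value} to {target.value}")
    return target


# A bug that fails its first retest before finally being closed.
path = [Status.ASSIGNED, Status.OPEN, Status.FIXED, Status.PENDING_RETEST,
        Status.RETEST, Status.REOPENED, Status.ASSIGNED, Status.OPEN,
        Status.FIXED, Status.PENDING_RETEST, Status.RETEST,
        Status.VERIFIED, Status.CLOSED]
status = Status.NEW
for step in path:
    status = move(status, step)
print(status.value)  # Closed
```

An attempt such as `move(Status.NEW, Status.CLOSED)` raises `ValueError`. This is how a tracker stops a bug from being closed without ever being fixed and retested.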

Test Metrics

Testing metrics are quantitative measures used to assess the efficiency, effectiveness, and quality of the software testing process. They provide insights into test coverage, defect density, and overall test performance.

Test Execution Metrics

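| Metric | Description and Formula |
|---|---|
| Test Case Execution Percentage | Share of planned test cases that have been executed: (Test Cases Executed / Total Test Cases Planned) × 100 |
| Test Case Pass Rate | Share of executed test cases that passed: (Test Cases Passed / Test Cases Executed) × 100 |
| Test Case Failure Rate | Share of executed test cases that failed: (Test Cases Failed / Test Cases Executed) × 100 |
| Test Coverage | Share of requirements covered by at least one test case: (Requirements Covered / Total Requirements) × 100 |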
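The execution metrics are simple ratios, so they are easy to compute from a test cycle summary. The Python sketch below uses the commonly cited definitions (for example, pass rate = passed ÷ executed × 100). The `ExecutionSummary` fields and the sample numbers are hypothetical. It also assumes that coverage is measured against requirements, which is only one of several coverage definitions in use.

```python
from dataclasses import dataclass


@dataclass
class ExecutionSummary:
    total_cases: int           # test cases planned for the cycle
    executed: int              # test cases actually run
    passed: int
    failed: int
    requirements_total: int
    requirements_covered: int  # requirements with at least one test case


def percent(part: int, whole: int) -> float:
    """part / whole as a percentage, rounded to two decimals; 0.0 if whole is 0."""
    return round(part * 100 / whole, 2) if whole else 0.0


def execution_metrics(s: ExecutionSummary) -> dict[str, float]:
    return {
        "execution_pct": percent(s.executed, s.total_cases),
        "pass_rate": percent(s.passed, s.executed),
        "failure_rate": percent(s.failed, s.executed),
        "coverage_pct": percent(s.requirements_covered, s.requirements_total),
    }


# Hypothetical cycle numbers, for illustration only.
summary = ExecutionSummary(total_cases=200, executed=180, passed=153, failed=27,
                           requirements_total=50, requirements_covered=46)
print(execution_metrics(summary))
# {'execution_pct': 90.0, 'pass_rate': 85.0, 'failure_rate': 15.0, 'coverage_pct': 92.0}
```

Pass and failure rates are computed against executed cases, not planned ones, so the 20 cases that have not yet run do not distort them.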

Defect Metrics

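| Metric | Description and Formula |
|---|---|
| Defect Density | Number of defects relative to the size of the software: Total Defects / Size (for example, KLOC) |
| Defect Leakage | Percentage of defects that escape testing: (Defects Found After Release / Defects Found During Testing) × 100 |
| Defect Severity Index (DSI) | Weighted average severity of reported defects: Σ(Severity Weight × Number of Defects at That Severity) / Total Defects |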
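The defect metrics can be sketched the same way. Definitions vary between organizations (some measure leakage against UAT rather than production, for instance), so the Python code below follows one common convention. The severity weights (critical = 4 down to trivial = 1) and all sample counts are assumptions for illustration.

```python
# Assumed severity scale: higher weight means more severe.
SEVERITY_WEIGHTS = {"critical": 4, "major": 3, "minor": 2, "trivial": 1}


def defect_density(defects: int, size_kloc: float) -> float:
    """Defects per thousand lines of code (KLOC)."""
    return defects / size_kloc


def defect_leakage(found_after_release: int, found_in_testing: int) -> float:
    """Escaped defects as a percentage of the defects found during testing."""
    return found_after_release * 100 / found_in_testing


def defect_severity_index(counts: dict[str, int]) -> float:
    """Weighted average severity across all reported defects."""
    total = sum(counts.values())
    weighted = sum(SEVERITY_WEIGHTS[sev] * n for sev, n in counts.items())
    return weighted / total


# Hypothetical project data.
print(defect_density(30, 12.5))   # 2.4 defects per KLOC
print(defect_leakage(6, 120))     # 5.0 (%)
print(defect_severity_index({"critical": 2, "major": 8, "minor": 6, "trivial": 4}))  # 2.4
```

With this scale, a DSI of 2.4 means the average open defect sits between "minor" and "major". A rising DSI across builds is a signal worth raising with the team.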

Test Efficiency and Productivity Metrics

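| Metric | Description |
|---|---|
| Test Efficiency | Relates testing output (such as test cases executed or defects found) to the effort or time spent on testing. |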

Test Artifacts

Test artifacts are essential documents created during different phases of the Software Testing Life Cycle (STLC) to maintain proper planning, execution, tracking, and reporting of testing activities.

| Test Artifact | Description | Created During |
|---|---|---|
| Test Plan | A document outlining the test strategy, scope, objectives, schedule, resources, and risks. | Test Planning |
| Test Cases | Detailed step-by-step test scripts specifying inputs, expected results, and pass/fail criteria. | Test Case Development |
| Test Scenarios | High-level test descriptions covering multiple test cases based on requirements. | Test Case Development |
| Test Data | Input data used to execute test cases, including valid, invalid, and boundary conditions. | Test Case Development |
| Requirement Traceability Matrix (RTM) | A mapping document linking test cases to their corresponding requirements to ensure full coverage. | Test Case Development |
| Test Environment Setup Document | Specifies hardware, software, network configurations, and access credentials required for testing. | Test Environment Setup |
| Test Execution Report | A daily/weekly summary of executed test cases, pass/fail status, and any blockers. | Test Execution |
| Defect Report | A detailed log of identified defects, including steps to reproduce, severity, priority, and status. | Test Execution |
| Bug Report | Captures information about a specific defect, including actual vs. expected results and supporting screenshots/logs. | Test Execution |
| Test Summary Report | A final document summarizing the testing process, test execution results, defect status, and overall quality assessment. | Test Closure |
| Lessons Learned Document | A post-testing review capturing challenges, best practices, and recommendations for future projects. | Test Closure |
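A Requirement Traceability Matrix is essentially an inverted index from requirements to the test cases that verify them. The Python sketch below builds one and flags any requirement with no linked test case. All requirement and test case IDs are hypothetical. In practice this data usually lives in a test management tool or spreadsheet rather than in code.

```python
# Hypothetical requirement and test case IDs, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]

# Each test case lists the requirement(s) it verifies.
test_cases = {
    "TC-101": ["REQ-1"],
    "TC-102": ["REQ-1", "REQ-2"],
    "TC-103": ["REQ-3"],
}


def build_rtm(reqs: list[str], cases: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert the test-case -> requirement links into requirement -> test cases."""
    rtm: dict[str, list[str]] = {req: [] for req in reqs}
    for case_id, linked in cases.items():
        for req in linked:
            # setdefault also records links to requirements missing from reqs.
            rtm.setdefault(req, []).append(case_id)
    return rtm


rtm = build_rtm(requirements, test_cases)
for req, linked_cases in rtm.items():
    print(f"{req}: {', '.join(linked_cases) or 'NOT COVERED'}")

uncovered = [req for req, linked_cases in rtm.items() if not linked_cases]
print("Uncovered:", uncovered)  # Uncovered: ['REQ-4']
```

The uncovered list is what makes the RTM useful: REQ-4 has no test case yet, so the test suite is incomplete before execution even starts.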

Conclusion

Manual testing is an indispensable part of software quality assurance. Mastering it requires understanding testing types, writing effective test cases, and applying best practices. Combining manual and automated testing helps deliver robust software quality.